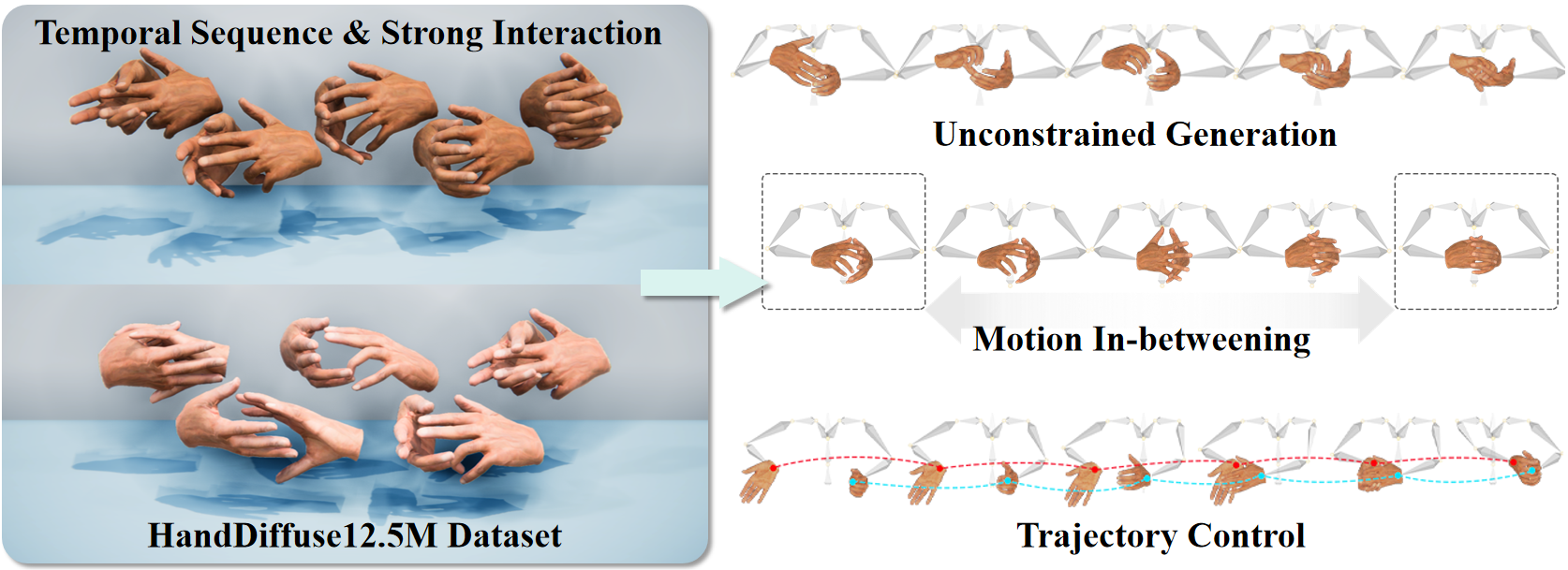

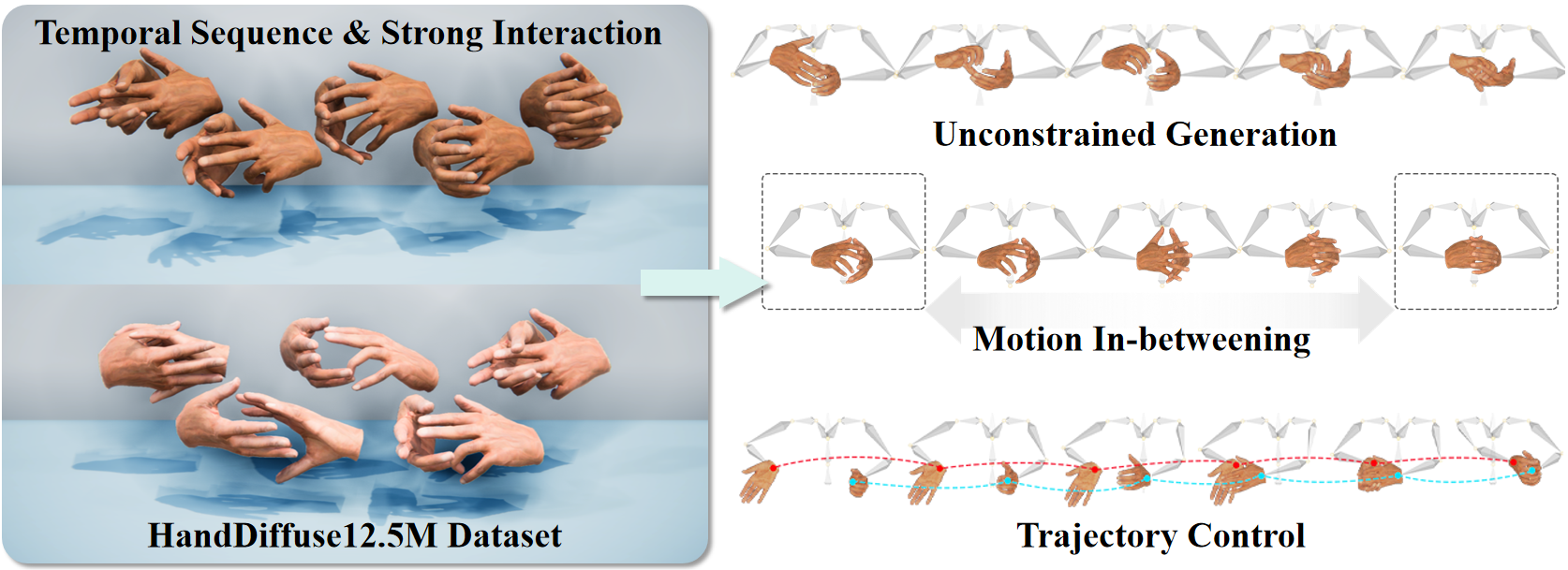

Existing hand datasets are largely short-range, and their interactions are weak because of the self-occlusion and self-similarity of hands, so they cannot yet meet the needs of interacting-hand motion generation. To address this data scarcity, we propose HandDiffuse12.5M, a novel dataset that consists of temporal sequences with strong two-hand interactions. HandDiffuse12.5M has the largest scale and richest interactions among existing two-hand datasets. We further present a strong baseline method, HandDiffuse, for the controllable motion generation of interacting hands using various controllers. Specifically, we use a diffusion model as the backbone and design two motion representations for different controllers. To reduce artifacts, we also propose an Interaction Loss that explicitly quantifies the dynamic interaction process. HandDiffuse enables various applications with vivid two-hand interactions, i.e., motion in-betweening and trajectory control. Experiments show that our method outperforms state-of-the-art techniques in motion generation and can also contribute to data augmentation for other datasets. Our dataset, corresponding code, and pre-trained models will be released to the community for future research on two-hand interaction modeling.

@misc{lin2023handdiffuse,
  title={HandDiffuse: Generative Controllers for Two-Hand Interactions via Diffusion Models},
  author={Pei Lin and Sihang Xu and Hongdi Yang and Yiran Liu and Xin Chen and Jingya Wang and Jingyi Yu and Lan Xu},
  year={2023},
  eprint={2312.04867},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}